Japanese researchers have developed an innovative AI method capable of converting the visual images in the human mind into written descriptions. This technology, called “mind-captioning,” has the potential to revolutionize neuroscience, assist patients with speech impairments, and raise new ethical debates about mental privacy.

Mind-Captioning: How It Works

The technique was pioneered by Tomoyasu Horikawa, a researcher at Nippon Telegraph and Telephone (NTT) Communication Science Laboratories in Japan. The study, published in Science Advances, involved six Japanese-speaking volunteers aged 22 to 37.

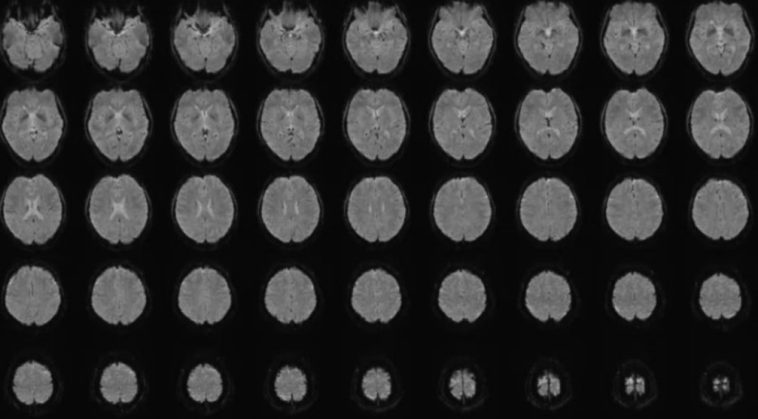

Participants were shown 2,180 short silent videos containing various scenes, including objects, natural landscapes, human actions, and daily activities. While they watched the videos, their brain activity was recorded using functional magnetic resonance imaging (fMRI).

The videos’ textual descriptions were first converted into numerical sequences using large language models (LLMs). Horikawa then trained AI “decoder” models to match these numerical representations with participants’ brain activity patterns.

The result: the AI could translate participants’ brain activity into coherent English sentences describing what they had seen, even for volunteers who did not speak English.
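To make the decoder-training step more concrete, here is a minimal sketch in Python. It assumes a simple linear (ridge regression) decoder and uses synthetic random numbers in place of real fMRI recordings and language-model features; the function names, array sizes, and the `alpha` regularization value are illustrative assumptions, not details taken from the study.

```python
import numpy as np

def fit_ridge_decoder(brain, features, alpha=1.0):
    """Fit weights W so that brain @ W approximates features.

    Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y.
    Hypothetical helper; the study's actual decoder may differ.
    """
    n_voxels = brain.shape[1]
    gram = brain.T @ brain + alpha * np.eye(n_voxels)
    return np.linalg.solve(gram, brain.T @ features)

def decode(brain, weights):
    """Predict text-feature vectors from brain activity patterns."""
    return brain @ weights

# Synthetic stand-in data (assumption: real inputs would be fMRI voxel
# responses and numerical features computed from video captions).
rng = np.random.default_rng(0)
n_videos, n_voxels, n_dims = 200, 50, 8
true_map = rng.normal(size=(n_voxels, n_dims))
brain_train = rng.normal(size=(n_videos, n_voxels))
text_features = brain_train @ true_map + 0.1 * rng.normal(size=(n_videos, n_dims))

# Train on "watched" videos, then decode features for unseen brain scans.
weights = fit_ridge_decoder(brain_train, text_features, alpha=1.0)
brain_test = rng.normal(size=(20, n_voxels))
predicted = decode(brain_test, weights)
print(predicted.shape)
```

In this toy setup the decoder learns one weight per voxel and feature dimension. A real pipeline would also need preprocessing of the scans and a separate decoder for each participant, which fits the article's point that the models rely on personalized brain data.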

Impressive Accuracy: Seeing and Remembering

The AI models were able to generate descriptions not only for videos participants were watching but also for scenes they recalled from memory. This demonstrates that mind-captioning can capture both immediate perception and internal recollection.

Horikawa explained that the system works independently of the brain’s language centers, meaning it could function even when these areas are damaged. This has significant implications for:

- People with aphasia (language loss after brain injury)

- People with amyotrophic lateral sclerosis (ALS)

- Individuals who are unable to speak or have limited communication abilities

- Some autistic individuals who struggle with verbal communication

Technical Insights

The “mind-captioning” system relies on several key components:

- fMRI Brain Scans: Capture real-time neural activity during video observation.

- Numerical Encoding of Videos: Text descriptions of videos are translated into numeric sequences via AI language models.

- Decoder Models: Match brain activity patterns to encoded video sequences and generate textual descriptions.

This approach allows researchers to reconstruct detailed visual information from the brain, including objects, actions, locations, events, and their interrelationships.
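The final matching step, going from decoded numbers back to words, can be illustrated with a deliberately simplified sketch. Instead of generating new sentences, this version only picks the best fit from a fixed list of candidate captions by cosine similarity. The word-count embedding, the vocabulary, the candidate captions, and the `decoded` vector are all made-up stand-ins for the language-model features and decoder output described above.

```python
import numpy as np

def embed(text, vocab):
    """Toy embedding: count how often each vocabulary word appears.

    Assumption: a stand-in for language-model features, not the real thing.
    """
    words = text.lower().split()
    return np.array([words.count(w) for w in vocab], dtype=float)

def cosine(a, b):
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0

def best_caption(decoded, candidates, vocab):
    """Return the candidate whose embedding is closest to the decoded vector."""
    scores = [cosine(decoded, embed(c, vocab)) for c in candidates]
    return candidates[int(np.argmax(scores))]

vocab = ["dog", "man", "runs", "beach", "eats", "bird", "flies", "sky"]
candidates = [
    "a dog runs on the beach",
    "a man eats",
    "a bird flies in the sky",
]

# Pretend the brain decoder produced a noisy version of the first caption.
noise = np.array([0.2, -0.1, 0.1, -0.2, 0.15, 0.05, -0.1, 0.1])
decoded = embed(candidates[0], vocab) + noise

print(best_caption(decoded, candidates, vocab))  # a dog runs on the beach
```

Because this toy embedding ignores word order, it could not tell "dog bites man" from "man bites dog". Features from a language model carry more of that relational information, which is one reason the choice of text representation matters.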

Applications for Healthcare and Communication

One of the most promising applications of mind-captioning is assisting individuals with impaired speech. Patients with neurological damage who cannot produce verbal language could potentially communicate via AI-generated text that represents their thoughts.

Additionally, this technology could benefit individuals with conditions that limit verbal expression, providing new tools for education, therapy, and assistive communication.

Ethical Considerations: Mental Privacy

Despite its groundbreaking potential, mind-captioning raises serious ethical concerns regarding mental privacy. Experts warn that:

- The ability to decode visual imagery from the brain could theoretically be applied to babies, animals, or even dreams, which presents unprecedented ethical dilemmas.

- Brain data must be considered sensitive personal information, requiring explicit consent from participants.

- AI systems must be developed with strict control mechanisms to prevent misuse of neural data.

Marcello Ienca, a neuroethics professor at the Technical University of Munich, highlighted that while mind-captioning represents a major scientific advance, it also challenges existing notions of privacy and consent.

Limitations of Current Technology

Horikawa notes that the current models require large amounts of personalized brain data to function effectively. Other limitations include:

- Unexpected or novel imagery can still pose challenges. For example, the AI might interpret a video of a dog biting a man correctly but struggle to decode a scene of a man biting a dog.

- The system cannot yet generalize perfectly to all mental imagery or across diverse populations.

- Practical applications remain limited due to high computational and data requirements.

Future Prospects

Despite its limitations, mind-captioning represents a significant step toward understanding the neural basis of thought and perception. Researchers envision potential expansions such as:

- Real-time communication aids for patients unable to speak

- Enhanced brain-computer interfaces (BCIs)

- Applications in cognitive neuroscience to map thought patterns more precisely

However, the ethical, legal, and social implications must be carefully considered to prevent misuse of the technology.

Expert Opinions

Neuroscience experts say that this technology could redefine the boundaries of human-computer interaction. It may allow computers to interpret human thoughts in unprecedented ways, offering a new dimension for AI-assisted cognition.

Ethicists, on the other hand, stress that protecting mental privacy is crucial. Transparent consent, strict data governance, and careful oversight will be necessary as these technologies advance.

Conclusion

The Japanese mind-captioning project demonstrates that artificial intelligence can now transform human mental imagery into descriptive text with impressive accuracy. While practical applications are still emerging, the potential for medical, scientific, and communicative breakthroughs is substantial.

At the same time, the technology opens new ethical debates about privacy, consent, and the boundaries of cognitive monitoring. As AI continues to intersect with neuroscience, society must carefully balance innovation with protection of individual rights.

Mind-captioning represents a landmark achievement in AI and neuroscience, marking the first steps toward decoding human thought into language. The journey ahead promises both exciting opportunities and critical ethical discussions.